It is and we have actual computers, but we don’t yet have a way to use them effectively. You run something, smoke comes out, you fix, recalculate and run again. We don’t have all the fancy abstraction that we have today, developers need to account for the speed at which the drums rotate.

Previously we covered how we can run mathematical equations on an abstract machine called “automata”. We reached the “edge of the map” for the types of automata we need, but not what implementations. We now have the knowledge that all we need is a Turing Machine, but it is impossible to build.

Our main constraint is memory size, IRL it will be a constant (not ) but they should be able to do all that a Turing Machine can. We call this Turing-Equivalence or a universal computer. Let’s replace the infinite tape of a TM with a fixed number of uniquely labeled registers, and let’s say it can have any non-negative integers i.e. . How this machine works will be covered below, but we can call this the register machine. Since this is an automaton it will come with a set of production rules i.e. the things it can do. The permissible set of operations is known as the instruction set.

Remember it is , computers are considered calculators, to be a useful language this automaton should be able to do some arithmetic and let me go around the program i.e. control flow. Based on the different ways to program and store it we get three machines.

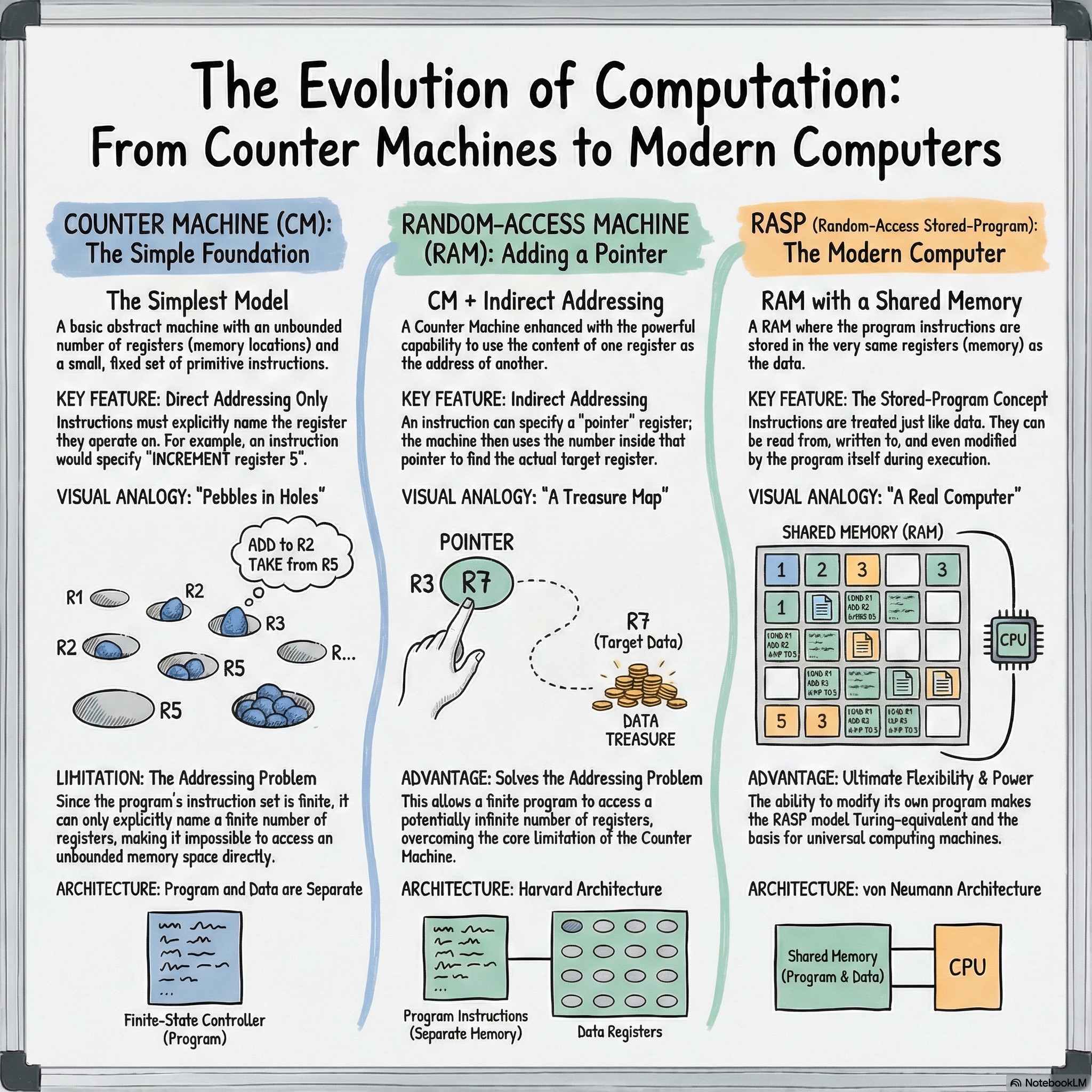

Counter machine (CM) → A register machine that contains a super minimal instruction set, one with only commands. increments value in register by , reduces value by or stays at and, which is a control statement telling the computer to jump to instruction if is . As you can see, each operation requires the precise register ID; there is no way to refer to a register i.e. no indirect addressing. One more instruction Halt () was added in .

These are very limited resources, so we are not going to reason how to build a language. Despite its primitive nature, the register counter machine (CM) is proved to be Turing complete as demonstrated by Minsky using Gödel encoding. Read this chat, to learn how to add +.

The core limiting factor for CM is that it cannot access unbounded memory, since each operand has access to only that register. But that is a theoretical limitation, we can still go ahead and make a chip for CM. The program is stored separately from the data, this is known as Harvard Architecture.

Random Access Machine (RAM) → Not to be confused with Random Access Memory. This machine allows using a value in a register as an address to another register, think pointers in C. It gives rise to data structures such as linked lists, etc and allows for more complex instructions such as addition and multiplication as a single step. However this is still Harvard Architecture.

RAM solves the core problem of CM, by providing infinite memory, but this is fallacy, RAM is a made up theoretical machine to help us understand things better. It has infinite memory, and also each slot can contain a number up to infinity. Why do we use this, say we want to prove merge sort works for numbers, .

Random Access Stored Program (RASP) Machine → Computationally this is as powerful as a RAM i.e. it can’t do anything RAM cannot do. However it stores the program and data in the same place, making it Von-Neumann architecture. Since it can modify itself, it acts like the universal TM.

In a TM to get to the -th cell, you must move the head steps (). In RASP we assume instant access i.e. . In reality though, world is a more complex data structure. Moving things around takes in a graph. In a Pointer machine, if you have a direct pointer to a node. It sits between CM and RAM. Instead of doing arithmetic on the address space to move around, we imagine the computer walking on a graph (web) of registers.

In the real world READ is expensive as machines keep wearing out. RASP is important because it is the theoretical model that solves READ by providing a single memory. Thus RASP is the architecture of the modern computer. Here’s a table comparing the automata to computers.

| Theoretical Model | Practical Hardware Equivalent |

|---|---|

| RASP | General Purpose CPUs (x86, ARM). They treat memory as a giant array of numbers where code and data mix freely. |

| Pointer Machine | Object-Oriented Runtimes (JVM, Python). While they run on RASP hardware, the "virtual machine" behaves like a pointer machine, managing a "heap" of connected objects. |

| Register Machine | ASICs & FPGAs. These often have fixed paths for data to flow between specific registers without a complex "pointer" system or a shared memory pool. |

One pattern emerges, in both Chomsky’s Hierarchy and Register Machines adding better memory strategy helps solve more complex problems.

CSE is a subject where theory often follows discovery and engineering. In the early th century electrical engineers figured out how Boolean Algebra (Boole ) maps very well to electrical circuits. Claude Shannon, computer scientist (biologist 😀) formalised the theory. It turns out we can’t build computers with characters, but we can with two and . ENIAC, IBM , Macintosh, mobile phones, SoC, robotics, space datacenter are all a continual trend built on just type of Universal-TM that can simulate billions of software Universal-TM.

Modern computers are nothing less than a miracle technology.

But this is neither the real nor the only path.

In # Diverse Automata we go over many many different types of computers whose ideas helped us get to where we are.

Important Links

- [2]Minsky 1967

- [3]Boole 1847

The opinions expressed herein are solely those of the author in their individual capacity and do not necessarily reflect the official policy or position of any current or former employer, client, or affiliated organization. Suggest changes.